Welcome to the November 4 -10, 2018 edition of the Office 365 Weekly Digest.

Last week, ten features were added to the Office 365 Roadmap, most of them for SharePoint Online, with others for Teams, Microsoft Bookings, Outlook and Exchange. Also of note is the Google G Suite to Office 365 migration experience, which will allow direct migration of email, contacts and calendars from Google G Suite to Office 365.

The Teams trend continues with more events over the next few weeks. New this week is a Power BI webinar on improving your data modeling skills. The Windows 10, Version 1809 webinar and Ask Microsoft Anything events have been rescheduled to November 28th and December 13th, respectively. Details are provided in the "Upcoming Events" section below.

Blog posts in last week's roundup include a round up of what's new in Teams, migrating content to Microsoft Teams using the SharePoint Migration Tool, a recap of OneDrive Message Center updates for the second half of October, updates for Microsoft Flow and PowerApps, and new AI features in Microsoft Word.

Noteworthy item highlights include a new Outlook Mobile Customer Adoption Pack, the October 2018 release details for Office 365 on Windows Desktop, and the latest Microsoft Mechanics episode detailing the steps of Modern Desktop Deployment. In addition, information on the end of extended support for SharePoint Server 2010 is provided, along with several options for migrating to the cloud or to the latest on-premises version of SharePoint.

OFFICE 365 ROADMAP

Below are the items added to the Office 365 Roadmap last week…

| Feature ID | App / Service | Title | Description | Status | Added | Estimated Release | More Info |
|---|---|---|---|---|---|---|---|
| 43717 | Outlook Web | Outlook on the web - option to sign in through outlook.com | We want to simplify how Office 365 users sign in to Outlook on the web. People with an Office 365 account who use Outlook on the web can now sign in to their work or school accounts using https://www.outlook.com. Outlook will redirect them to their organization's sign-in page, populate the email address, and follow the organization's current sign-in process. | In development | 11/06/2018 | November CY2018 | n/a |
| 43758 | Teams | Support for Oracle SBCs in Direct Routing | Direct Routing allows customers to connect their voice trunks to Office 365. This feature requires certified Session Border Controllers (SBCs). Oracle SBCs are in the process of being certified, and we expect the first SBC to be certified this quarter (Q4 CY2018). | In development | 11/07/2018 | December CY2018 | Session Border Controllers certified for Skype for Business |
| 43751 | Bookings | Microsoft Bookings - new service hours experience | More options to customize your business service hours in Microsoft Bookings, including seasonal availability. You can create multiple rules to better customize your bookable hours. | In development | 11/07/2018 | November CY2018 | n/a |
| 43774 | SharePoint | SharePoint pages: custom thumbnails and descriptions | You will now be able to choose both a new thumbnail and a page description within page details. Previously, modern pages automatically selected the first image and generated a description for the page to use in search, highlighted content, and SharePoint News. Now you can customize these components to control how they appear to your viewers in various places. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43777 | SharePoint | SharePoint web part: personalized web parts | Give your site and page visitors a personalized experience, so they see the content that is theirs and meant for them. When using personalized web parts, people will see their recent sites, their recent documents, and news tailored for them. You can personalize any page or news article. When you add a personalized web part to a page, it is aware of who is signed in and gives them a unique, relevant experience with the content and information you are promoting to them. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43778 | SharePoint | SharePoint web part: YouTube embeds | A picture is worth a thousand words. A YouTube video can be worth a million. And it's best if it's not just a link out to the web, but rather a playable video that sits right beside the additional context you want to surround it. You can add a video from YouTube by pasting the share link provided by YouTube. We've added a YouTube icon to make this more apparent in the toolbox. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43779 | SharePoint | SharePoint web part: use lists with the Quick Charts web part | The Quick Charts web part now allows you to select a list on the current site as the source of the data to visualize, instead of entering the data manually. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43780 | SharePoint | SharePoint web part: code snippet | The Code Snippet web part allows authors to share code snippets, with correct syntax, for many common development languages on their modern pages. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43781 | SharePoint | SharePoint sites: updated "Change the look" panel | This update to the "Change the look" panel includes a new tabbed interface for the various site settings (theme, header, navigation and footer), all accessible inline to site owners in a right-hand fly-out edit pane. | In development | 11/08/2018 | November CY2018 | Build your modern intranet with SharePoint in Office 365 |
| 43782 | Office 365, Exchange | Google G Suite to Office 365 migration | We've heard the feedback and we're excited to announce the new G Suite migration experience, which will allow you to directly migrate email, calendar and contacts from Google G Suite to Office 365! Our highly secure solution ensures your data is migrated directly to Office 365, with no resting points along the way. We're also adding support for migrating mailboxes in batches. | In development | 11/08/2018 | Q2 CY2019 | n/a |

UPCOMING EVENTS

Teams Tuesdays

When: Tuesday, November 13, 2018 from 10am to 11am PT | Whether you're managing a new project or starting your own business, it helps to have a team behind you to brainstorm ideas, tackle the work together, and have some fun along the way. Now you can use Microsoft Teams to do just that. Join our team live every Tuesday from 10am to 11am PT to learn how you can get started with the free version of Teams. In this hour, we'll walk you through the product and key features, share best practices for getting started, and answer any questions you may have. We look forward to meeting you!

Getting Started with Microsoft Teams

When: Tuesday, November 13, 2018 at 10am PT | This 60-minute session introduces you to the key activities needed to get started with Microsoft Teams today. From setting your profile, to running a meeting, users will leave this session with the foundation needed to use Teams with confidence. Check here for sessions in different time zones and other dates.

Upgrade 101: Understanding your upgrade from Skype for Business to Microsoft Teams

When: Tuesday, November 20, 2018 at 10am PT | Looking to better understand the upgrade journey from Skype for Business to Microsoft Teams? Join us for this 60-minute session to get familiar with our upgrade paths (Upgrade Basic and Upgrade Pro), our resources, and a walkthrough of our upgrade success framework to help you navigate through your journey. Check here for sessions in different time zones and other dates.

Strengthen Your Data Modeling Skills with Power BI

When: Tuesday, November 20, 2018 at 11am PT | Register for this webinar to take your Power BI modeling skills to the next level. Learn about the Power BI in-memory analytics engine, strategies for creating and managing data relationships, and how to use Data Analysis Expressions (DAX) filter context. Find out how to master any modeling challenge with Power BI or Azure Analysis Services. You'll also learn how to: (1) Load, store, and analyze data in Power BI, (2) Define business rules and calculations using DAX, and (3) Use DirectQuery to connect directly to data sources.

Make the switch from Skype for Business to Microsoft Teams: End User Guidance

When: Wednesday, November 21, 2018 at 1pm PT | Designed specifically for Skype for Business end users, this course offers everything you need to help make the transition to Microsoft Teams. We'll focus on the core communication capabilities you use today, chat and meetings, as well as provide an orientation to additional collaboration functionality Teams has to offer. Check here for sessions in different time zones and other dates.

[Rescheduled] Webinar: What's new in Windows 10, version 1809 for IT pros

When: Wednesday, November 28, 2018 at 10am PT | As an IT professional, you have a lot on your plate. You're managing corporate- and user-owned devices, deploying feature and quality updates, identifying and resolving compatibility issues, and more. Windows 10, version 1809 includes features that can help you simplify upgrade planning, identify and resolve compatibility blockers, monitor update compliance, and remediate end user impacting issues so you can get your job done with less frustration. Join this webinar to: (1) Discover how you can move away from traditional, image-based deployment with Windows Autopilot, (2) Learn about the steps you can take right away to better protect user identities, devices, and information, and (3) Find out how you can create more secure, scalable, and reliable desktop virtualization solutions that integrate with your modern desktop. | Resource: What's new in Windows 10, version 1809 for IT pros

[Rescheduled] Ask Microsoft Anything (AMA): Windows 10, version 1809 for IT Pros

When: Thursday, December 13, 2018 at 9am PT | If you're in IT, make sure you join us Thursday, December 13th to get up to speed and get your questions answered about Windows 10, version 1809. An AMA is a live online event similar to a "YamJam" on Yammer or an "Ask Me Anything" on Reddit. We've assembled a group of engineers and product managers from the Windows, Windows Defender ATP, System Center Configuration Manager, Microsoft Intune, Microsoft Edge, and Microsoft 365 teams, and we'll be answering your questions live during what promises to be an exciting and informative "Ask Microsoft Anything" (AMA) event. Join in the Windows 10 AMA space, or add it to your calendar. We look forward to seeing you there!

BLOG ROUNDUP

What's new in Microsoft Teams – November round up

Microsoft Teams, the hub for teamwork in Office 365, continues to bring new capabilities to empower teams and organizations to achieve more. With new features like Drive Mode and Quiet Hours, users can enjoy enhanced experiences while commuting or engaging in non-work-related activities. Additionally, new functionality for admins, such as creating teams based on dynamic group membership, reduces the overhead of manually updating team membership. There were also new third-party app integrations with Confluence Cloud, Meekan, Jira Cloud and more! Improved presence for Coexistence Mode gives others in the organization a more accurate view of each user's status while organizations transition from Skype for Business to Teams.

Related:

ICYDK Microsoft Teams Migration with the SharePoint Migration Tool

Designed to simplify your journey to the cloud through a free, simple, and fast solution, the SharePoint Migration Tool enables you to migrate content from on-premises SharePoint sites and file shares to SharePoint, OneDrive, and Microsoft Teams in Office 365. Using the SharePoint Migration Tool, you can quickly bring your most important content to Microsoft Teams to create a more open, digital environment. Because each team in Microsoft Teams has a team site in SharePoint Online, the SharePoint Migration Tool provides a quick and simple way to bring your files to Microsoft Teams in Office 365.

OneDrive Message Center Updates October 16 - 31, 2018

The team has been hard at work and we have some great new updates coming in November that you might be interested in! This month, we will be releasing the next version of the OneDrive mobile apps (on both iOS and Android) with support for new Mobile Capture scenarios and intelligent meeting note sharing. The Word, Excel and PowerPoint mobile apps will ship the common sharing dialog as well. On the web, we are releasing the new Recent and the new Manage Access experiences. Finally, we're making some updates to the Access Request Outlook Actionable Messages. All of these features will start arriving in your organization in November. We are also announcing deprecation plans for the OneDrive application on Mac OS X Yosemite (10.10) & El Capitan (10.11).

Solutions in Microsoft Flow

In a recent announcement, Microsoft shared news about a new Application Lifecycle Management (ALM) capability for PowerApps and Microsoft Flow. This new capability is built upon the Common Data Service solution system. In this blog post, we will share more details about how Microsoft Flow makers can use Solutions to bundle related flows (and apps) within a single deployable unit. | Related: Solutions in Power Apps | Introducing Mobile Application Management (MAM) support for Microsoft Flow Mobile Application

Collaborate with others and keep track of to-dos with new AI features in Word

Focus is a simple but powerful thing. When you're in your flow, your creativity takes over, and your work is effortless. When you're faced with distractions and interruptions, progress is slow and painful. And nowhere is that truer than when writing. Word has long been the standard for creating professional-quality documents. Technologies like Editor, Word's AI-powered writing assistant, make it an indispensable tool for the written word. But at some point in the writing process, you'll need some information you don't have at your fingertips, even with the best tools. When this happens, you likely do what research tells us many Word users do: leave a placeholder in your document and come back to it later to stay in your flow. We're starting to roll out new capabilities to Word that help users create and fill in these placeholders without leaving the flow of their work. For example, type "TODO: finish this section" or "<<insert closing here>>", and Word recognizes and tracks them as to-dos. When you come back to the document, you'll see a list of your remaining to-dos, and you can click each one to navigate back to the right spot. Over time, Office will use AI to help fill in many of these placeholders. In the next few months, Word will use Microsoft Search to suggest content for a to-do like <<insert chart of quarterly sales figures>>. You will be able to pick from the results and insert content from another document with a single click. These capabilities are available today as a preview for Word on the Mac for Office Insiders (Fast). We'll roll these features out to all Office 365 subscribers soon for Word for Windows, the Mac, and the web.
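To make the placeholder idea concrete, here is a hypothetical Python sketch of how such markers could be found and listed in document order. It is not Word's implementation: it assumes a placeholder is either a "TODO:" marker running to the end of its line or text wrapped in double angle brackets, and the names `find_placeholders` and `Placeholder` are invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical patterns (assumptions, not Word's rules):
# a "TODO:" marker running to the end of its line, or text in << >>.
TODO_LINE = re.compile(r"TODO:\s*(.+)")
ANGLE_PLACEHOLDER = re.compile(r"<<(.+?)>>")


@dataclass
class Placeholder:
    offset: int  # character position, used to navigate back to the spot
    text: str    # the note the writer left for later


def find_placeholders(document: str) -> List[Placeholder]:
    """Collect placeholders of both kinds, sorted by position in the text."""
    found = [Placeholder(m.start(), m.group(1).strip())
             for m in TODO_LINE.finditer(document)]
    found += [Placeholder(m.start(), m.group(1).strip())
              for m in ANGLE_PLACEHOLDER.finditer(document)]
    return sorted(found, key=lambda p: p.offset)


if __name__ == "__main__":
    draft = (
        "Q3 summary\n"
        "TODO: finish this section\n"
        "Revenue grew. <<insert chart of quarterly sales figures>>\n"
        "Thanks for reading. <<insert closing here>>\n"
    )
    for p in find_placeholders(draft):
        print(p.offset, p.text)
```

Sorting by character offset mirrors the described to-do list, where each entry jumps back to its spot in the document; filling a placeholder with suggested content would be a separate step not modeled here.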

NOTEWORTHY

Plan, deliver, and adopt Outlook for iOS and Android in your organization

Published: November 5, 2018 | Use the resources in the Outlook Mobile Customer Adoption Pack to make the most of Microsoft Outlook for iOS and Android for your organization. This adoption pack contains a wide range of customizable onboarding templates, flyers, and posters that IT Pros, administrators, and trainers can use to roll out and drive the adoption of Outlook mobile to end users in their organization. Included in this pack: (1) Customizable onboarding email templates with links to a demo video and installation instructions, and (2) Posters and flyers to drive buzz and excitement.

Announcing data residency in Canada for Microsoft Stream

Microsoft Stream is a global service already widely used by customers around the world. As part of our ongoing commitment to supporting the data residency needs of local governments and other customers, we continue to enable more regions in which customers can store their Stream data. To that end, we are excited to announce the option to store Microsoft Stream data in the Canada region. Starting September 24, 2018, new Canada-based tenants automatically benefit from this new Stream region. At this time, content for customers in Canada who were already using Microsoft Stream before September 24, 2018 will remain in the region where it was originally stored. To determine the region in which your Stream data is stored, click the "?" in your Stream portal and then click "About Microsoft Stream."

Office 365 for Windows Desktop - October 2018 release details

On October 30th, 2018, Microsoft released Office for Windows Desktop version 1810 (Build 11001.20074). Our Office International team translated this update into 44 languages. Here are some of our favorite new features that shipped in this release: (1) Insert animated 3D graphics into your Microsoft Word document, so you can have a T-Rex giving you the evil eye in your Word document, and (2) In PowerPoint, you can now change hand-drawn text and shapes into refined diagrams. You can also write over a shape with your pen and use the Ink to Text button to automatically convert your handwritten ink to typed text on the shape. More information and help content on this release can be found on the What's New in Office 365 page.

Microsoft Mechanics: User Communications and Training - Step 8 of Modern Desktop Deployment

Format: Video (8 minutes) | This is step 8 in the desktop deployment process - ensuring that your users are informed, ready and able to benefit from updates to Windows, Office and more. In this step, you'll learn about best practices for communicating to users, phased deployment to move at a measurable pace and pre-built user training for Office 365 via FastTrack's free Productivity Library. | Resource: Modern Desktop Deployment Center

Extended support for SharePoint Server 2010 ends in October 2020

This month marks the beginning of the 24-month countdown before SharePoint Server 2010 reaches the end of extended support. It's not too late to start planning an upgrade or migration to the latest version of SharePoint, whether your plans are on-premises, in the cloud, or somewhere in between. Mainstream support for SharePoint Server 2010 ended in October 2015. SharePoint Server 2010 has been on extended support since then, which means only security updates are released. On October 13, 2020, Microsoft will completely end support for SharePoint Server 2010. Here's what end of support means: (1) No critical updates were released in 2017 for SharePoint Server 2010 under extended support, (2) No security updates will be developed or released after end of support, and (3) More importantly, the operating systems supporting SharePoint Server 2010 are reaching or have reached end of support.


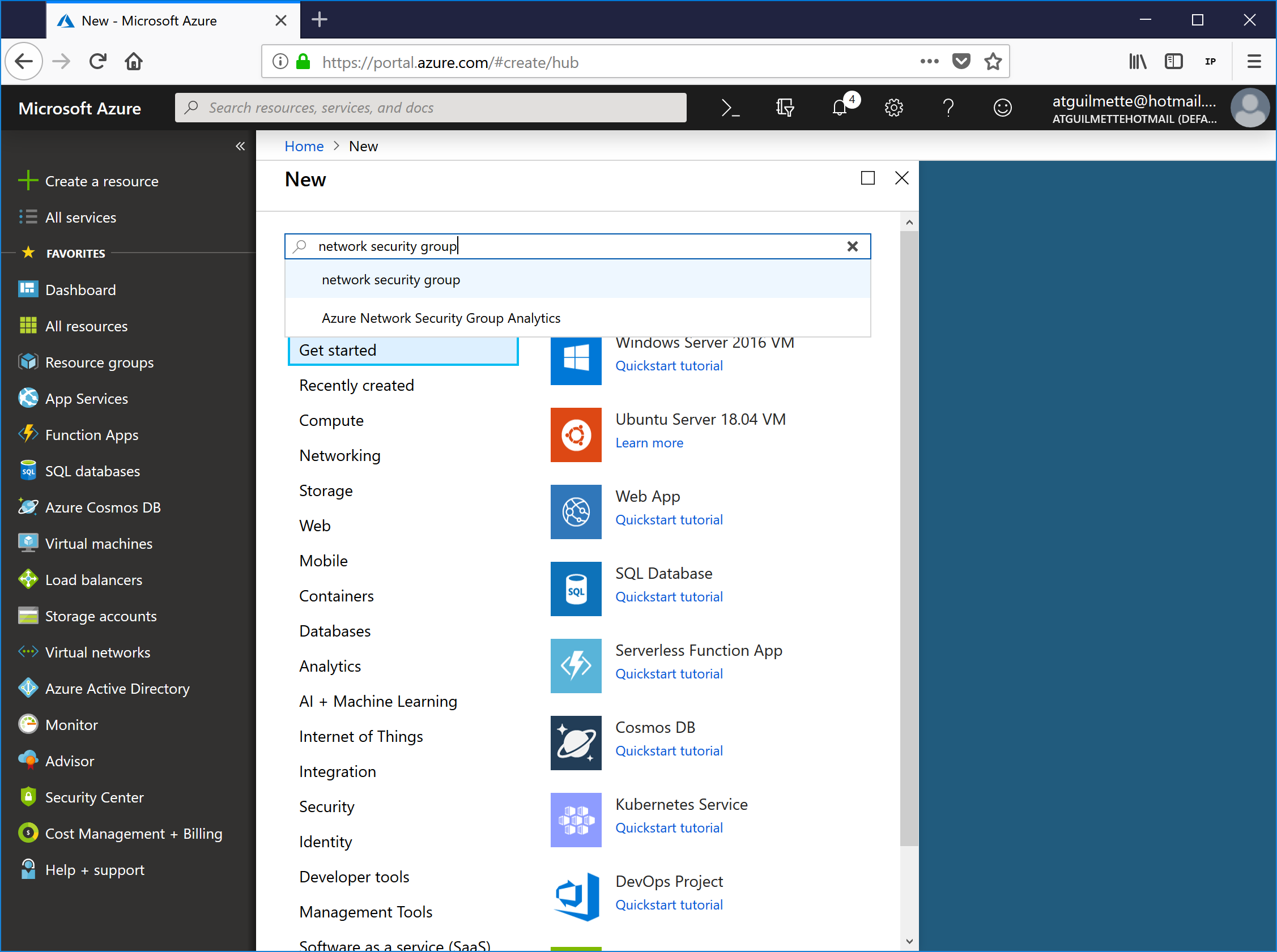

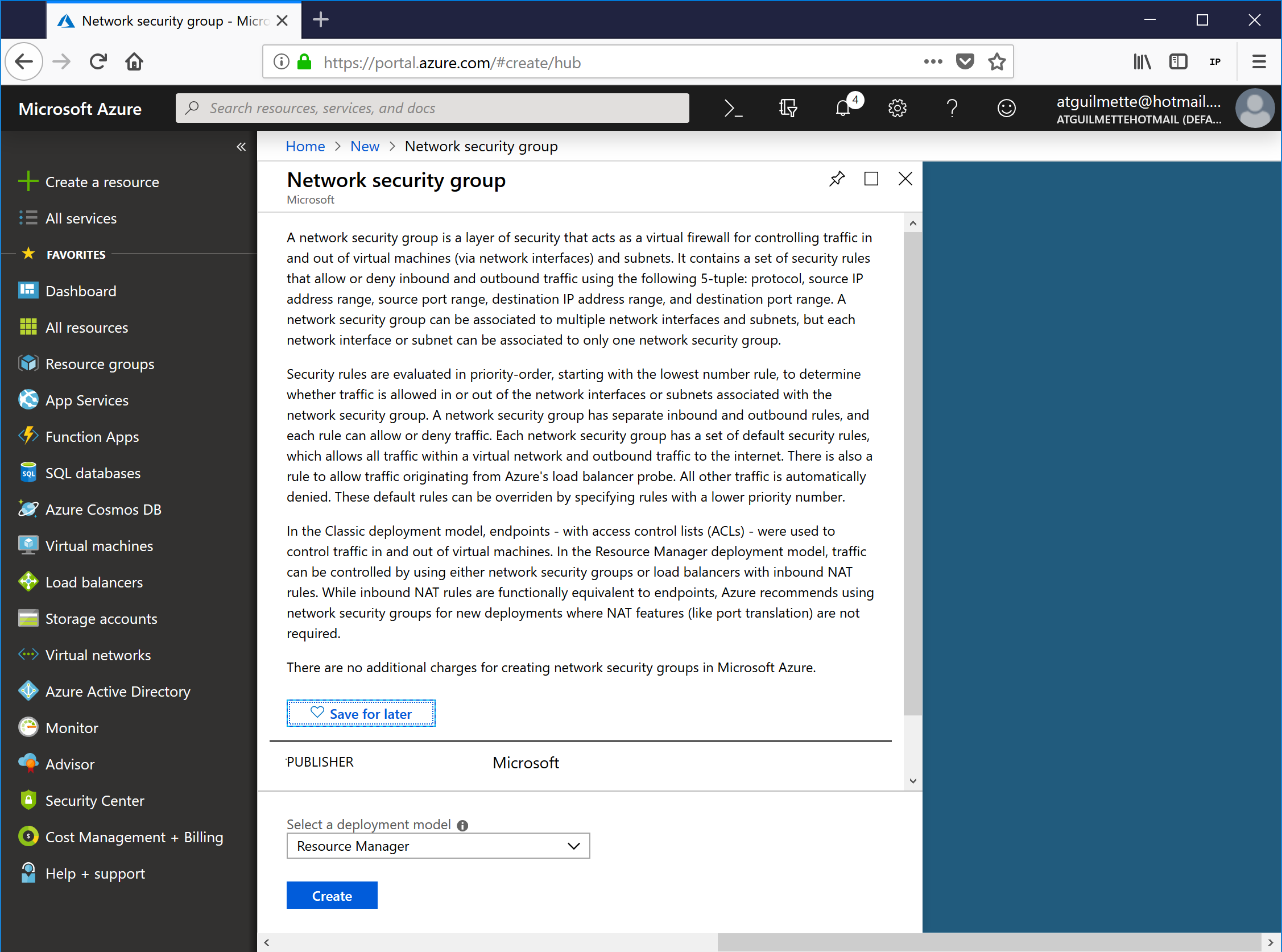

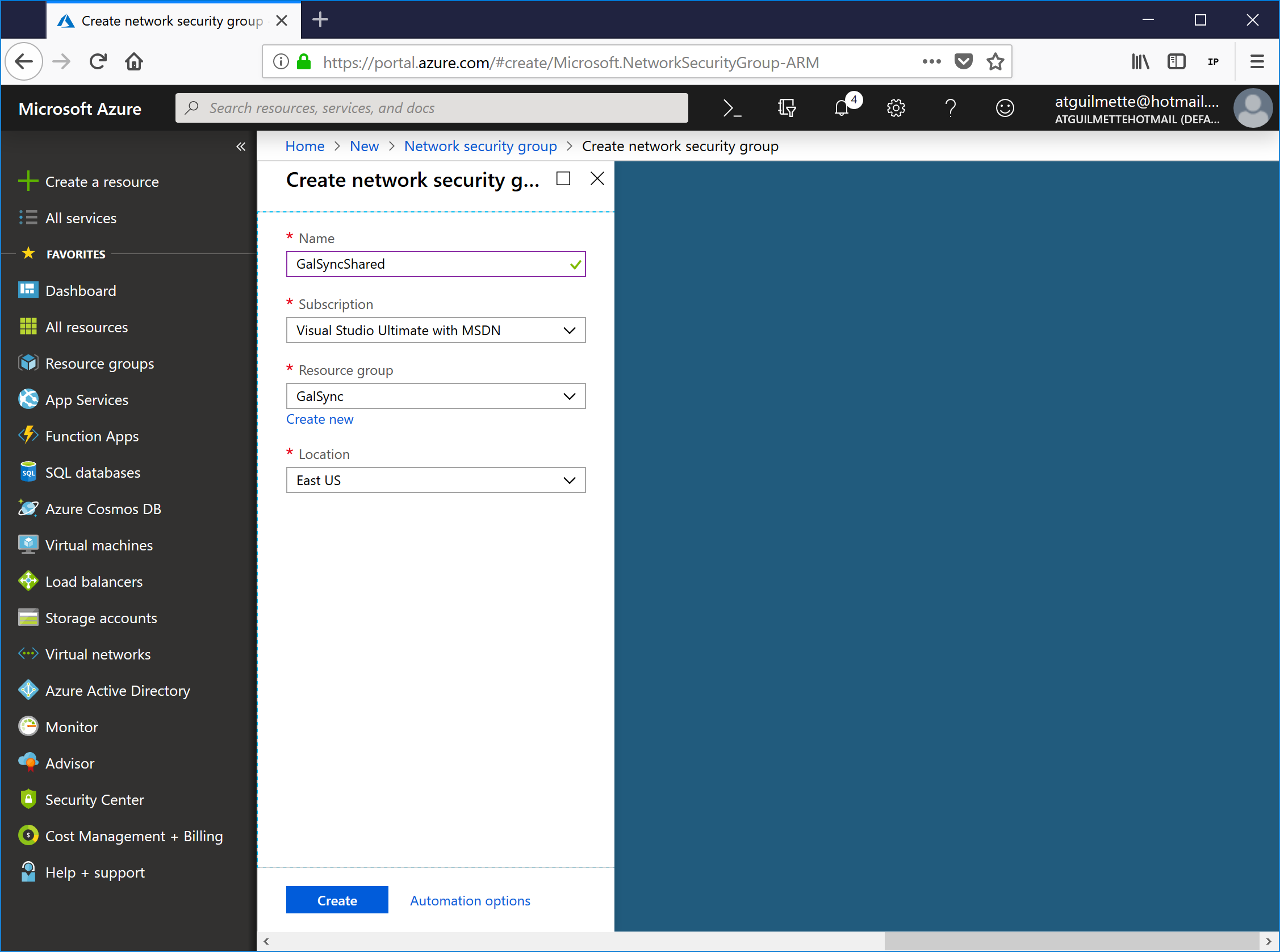

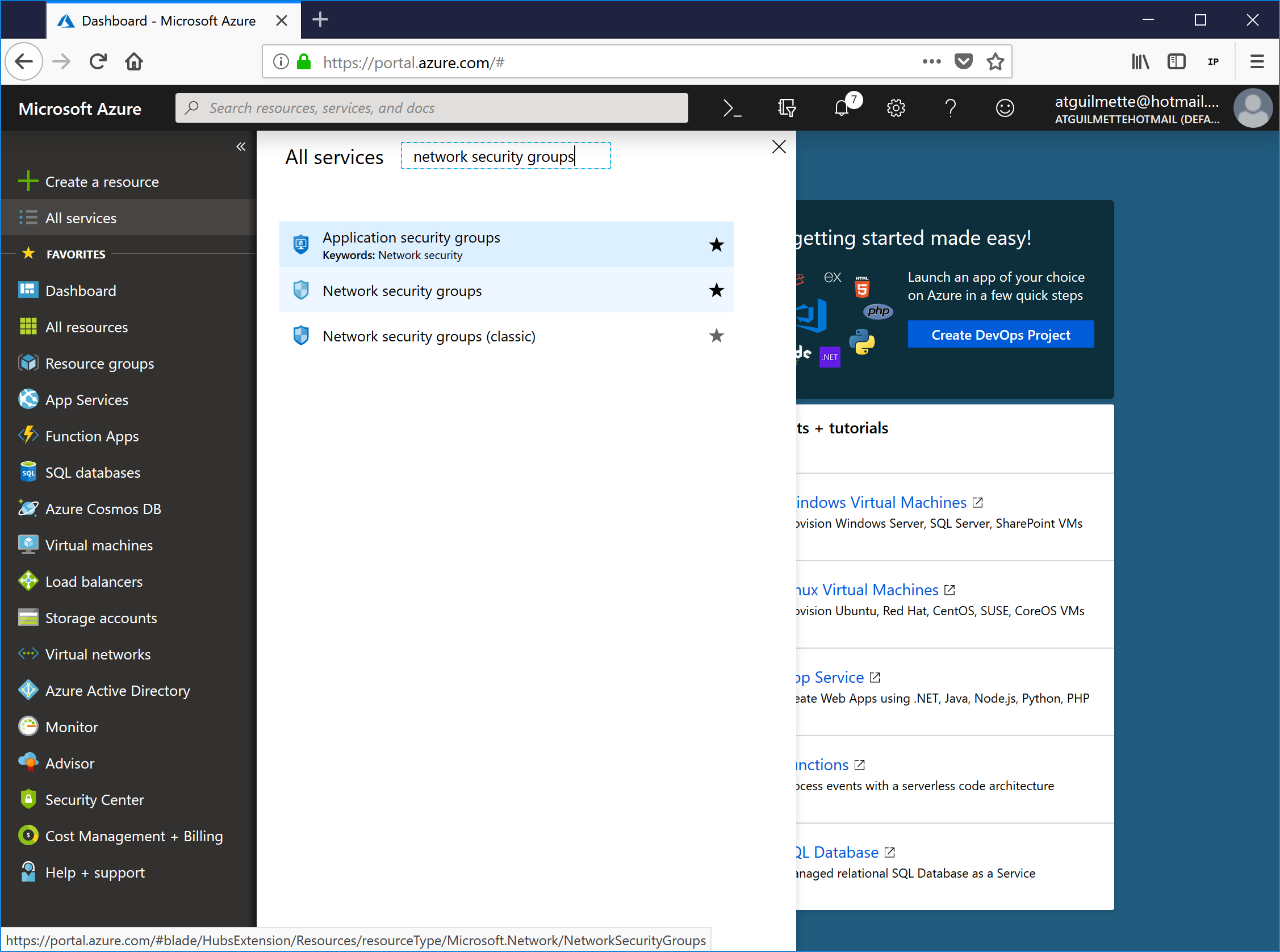

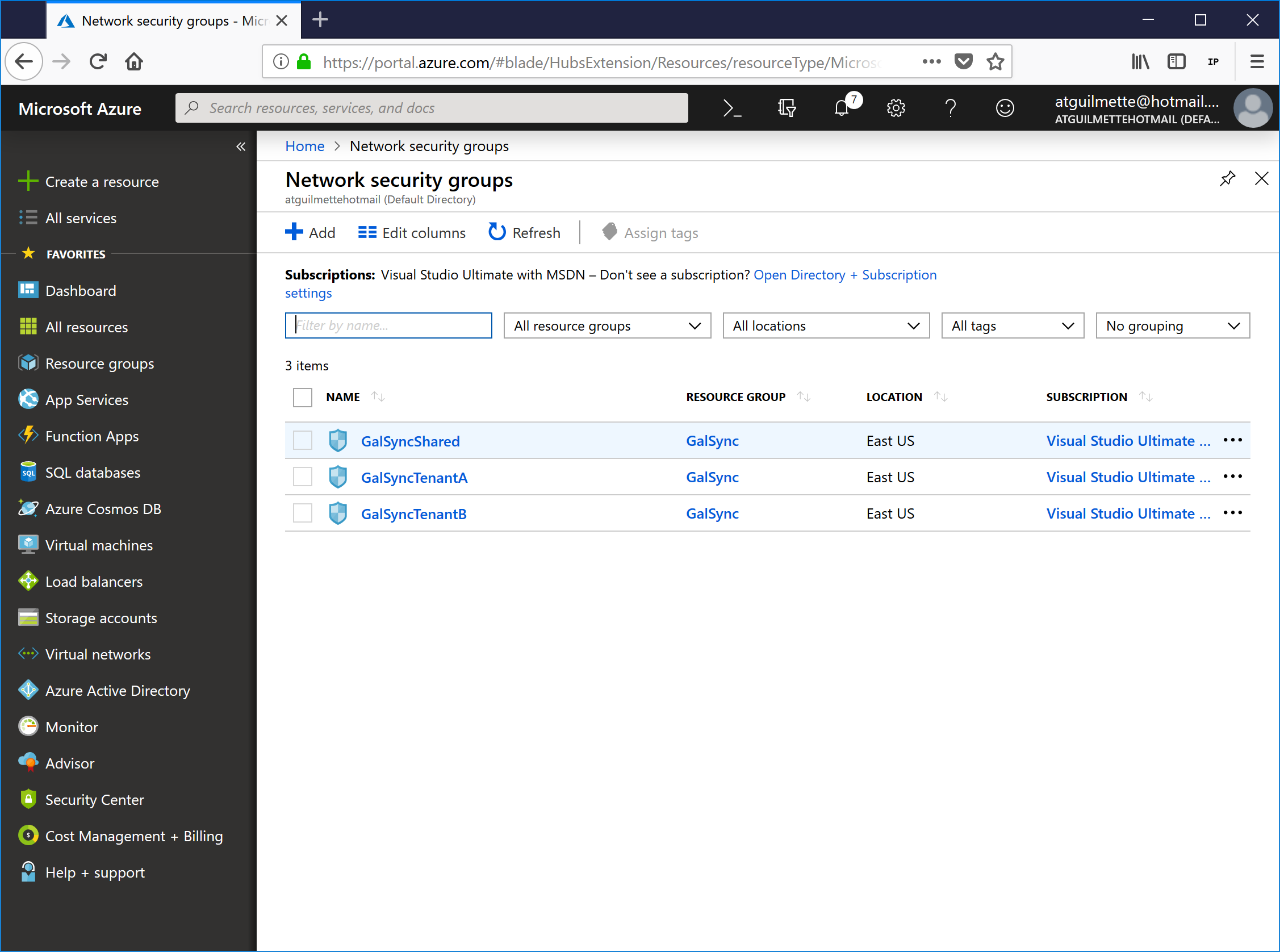

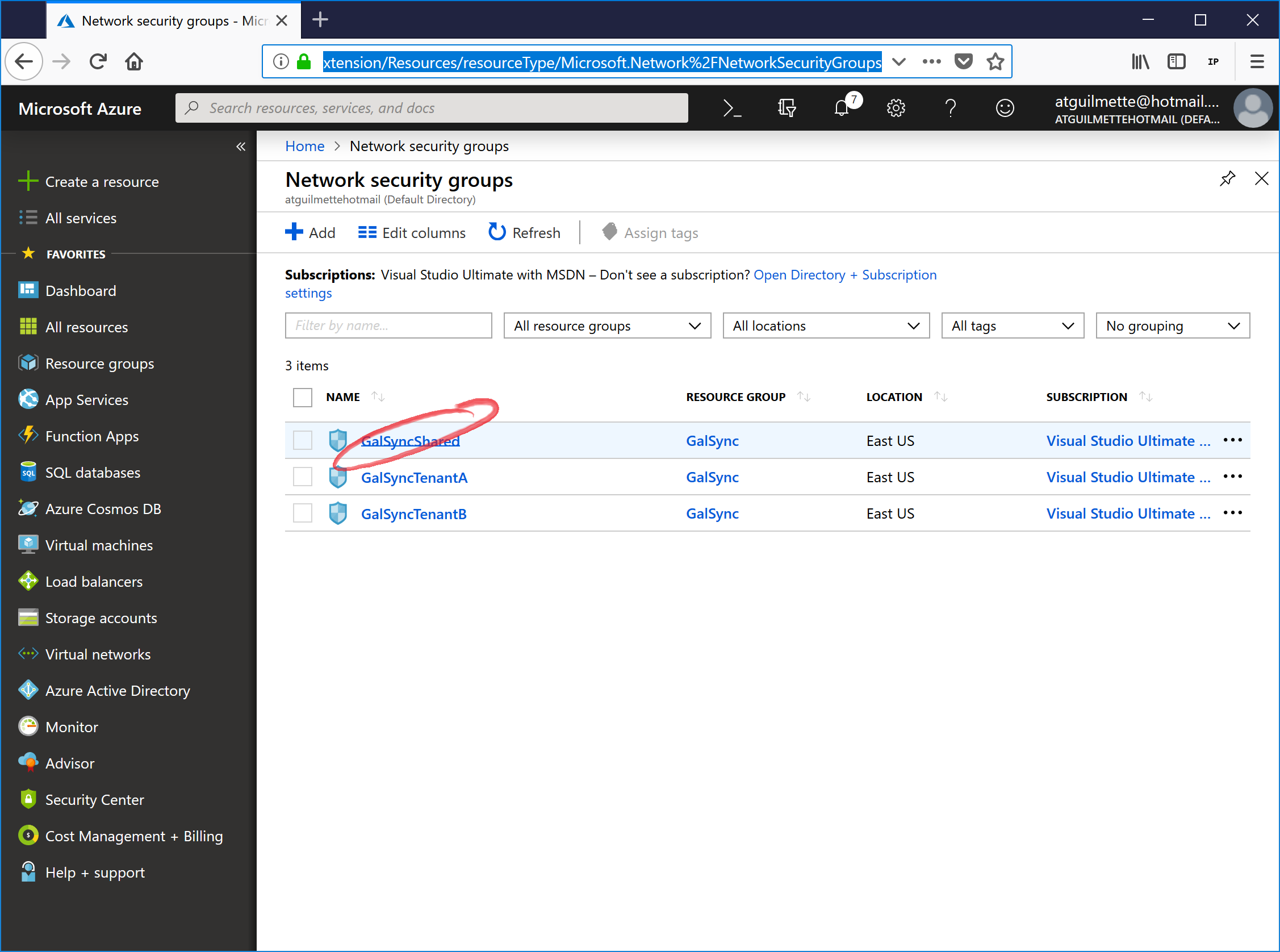

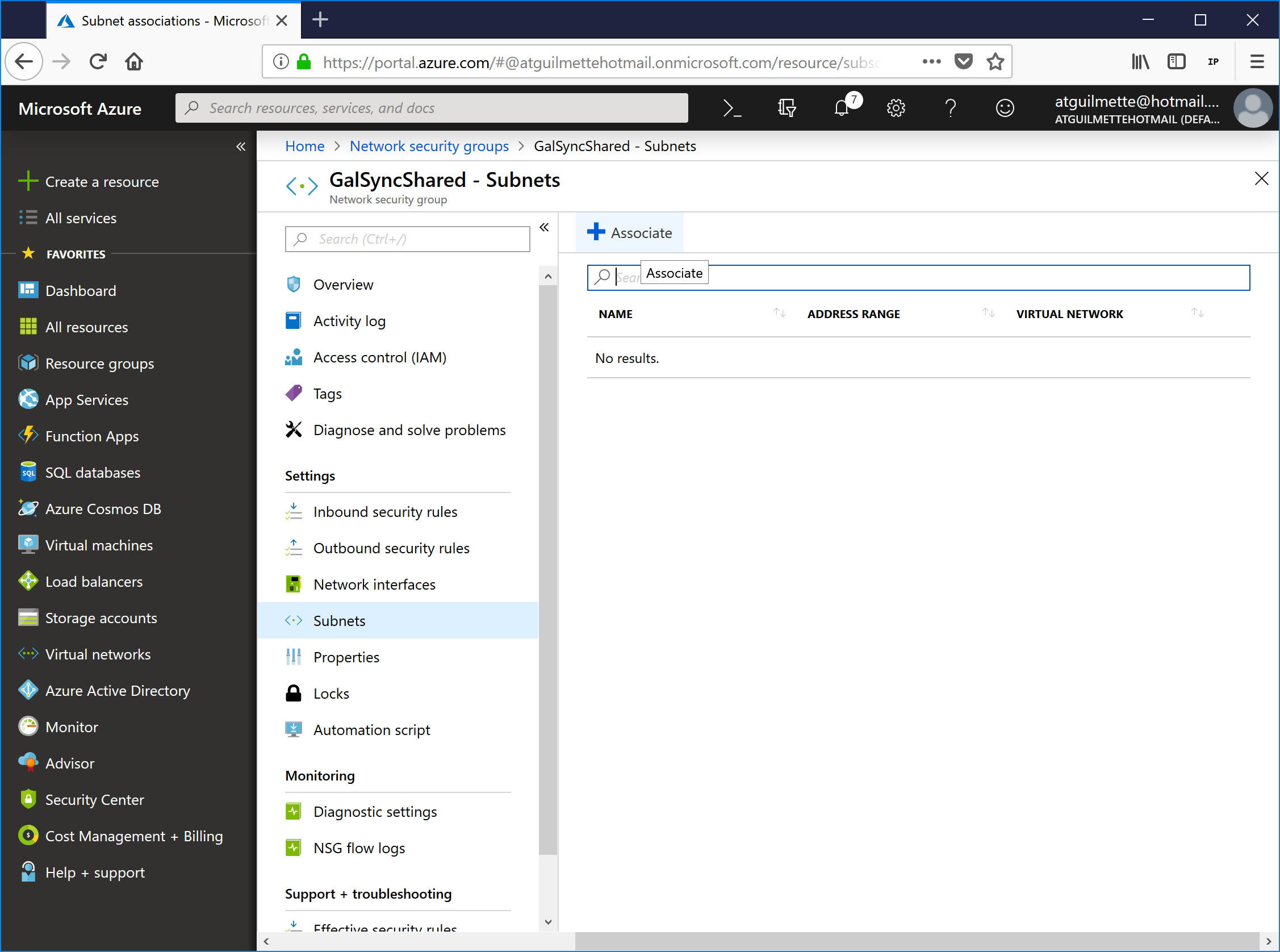

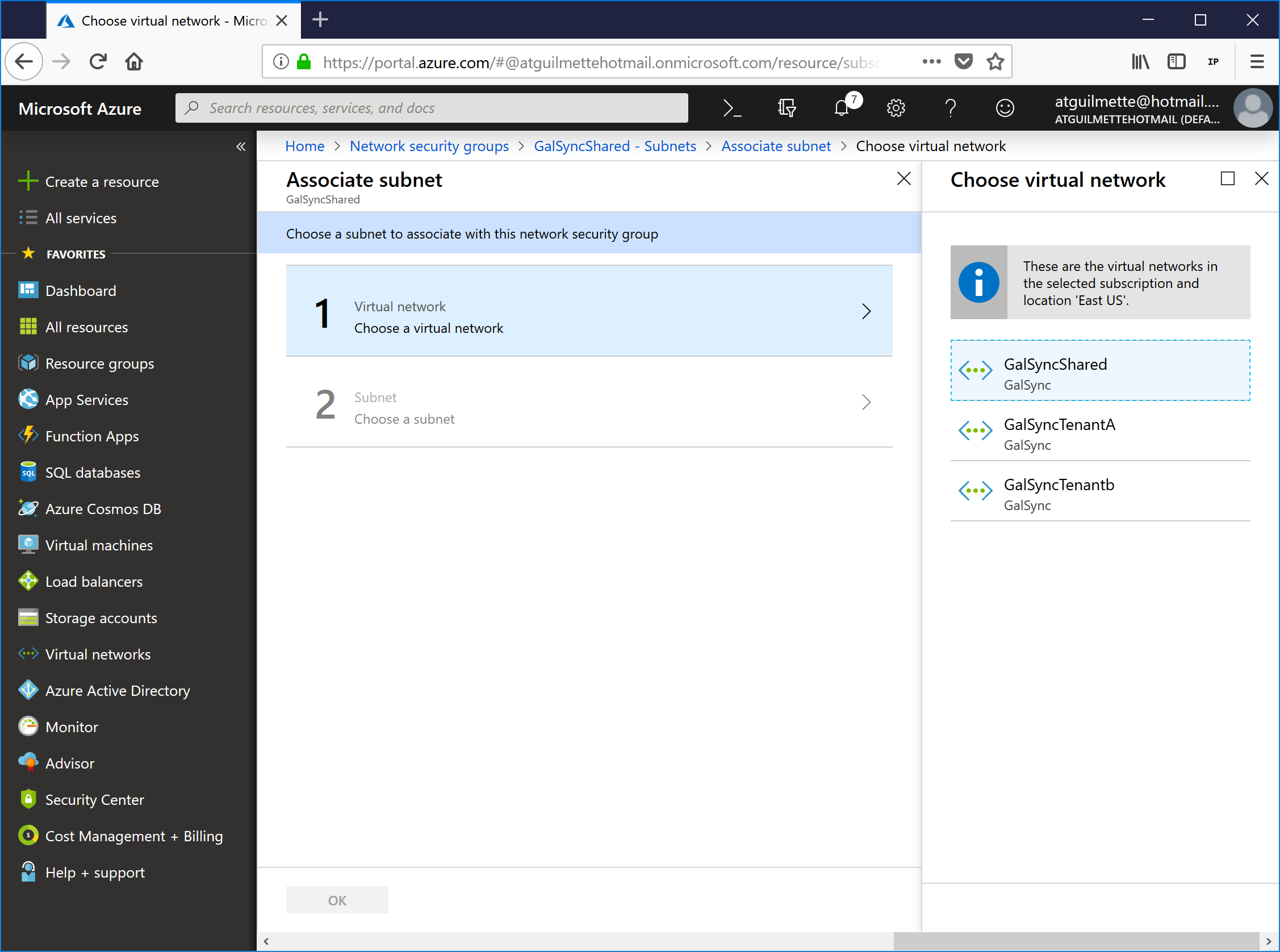

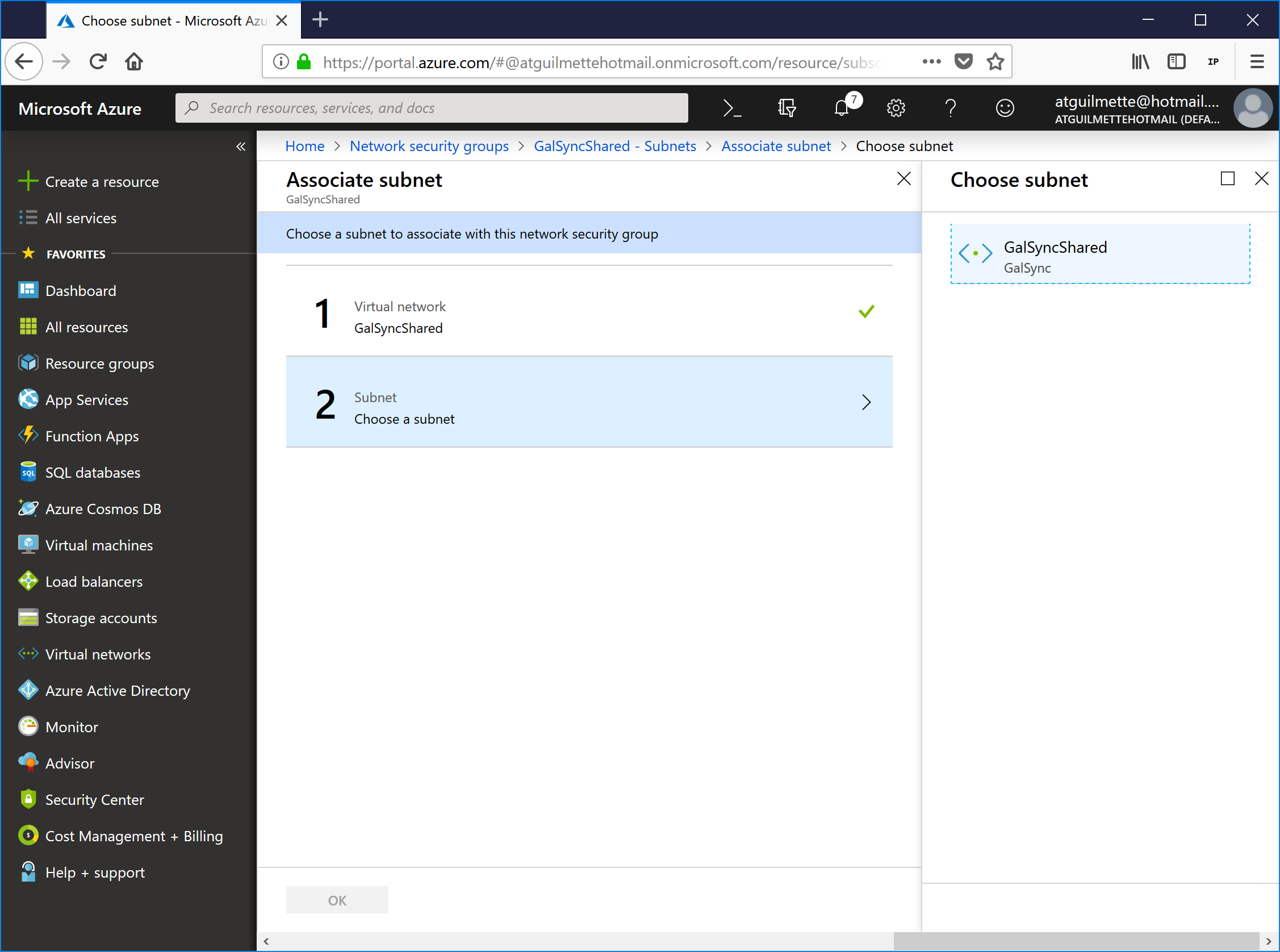

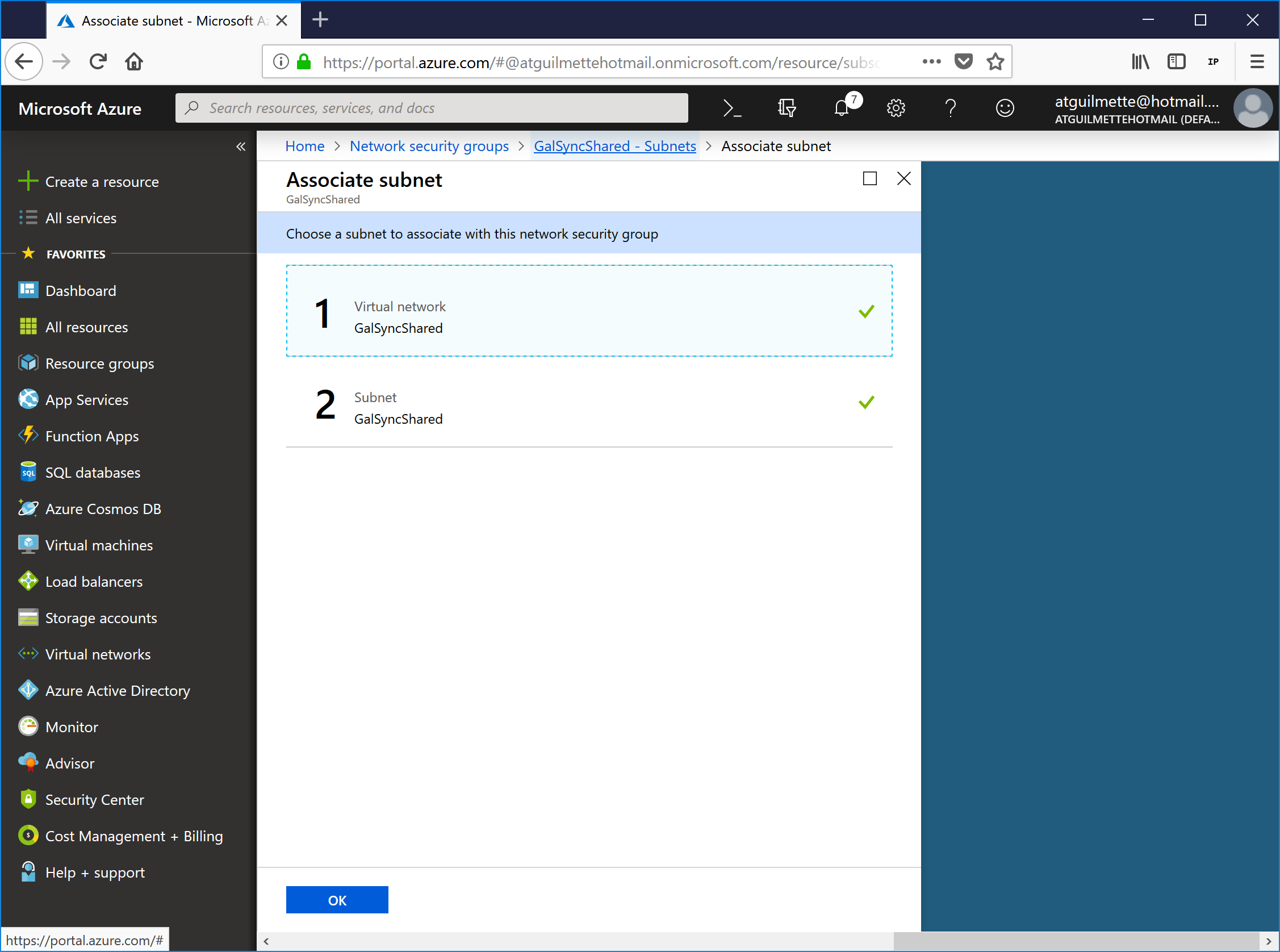

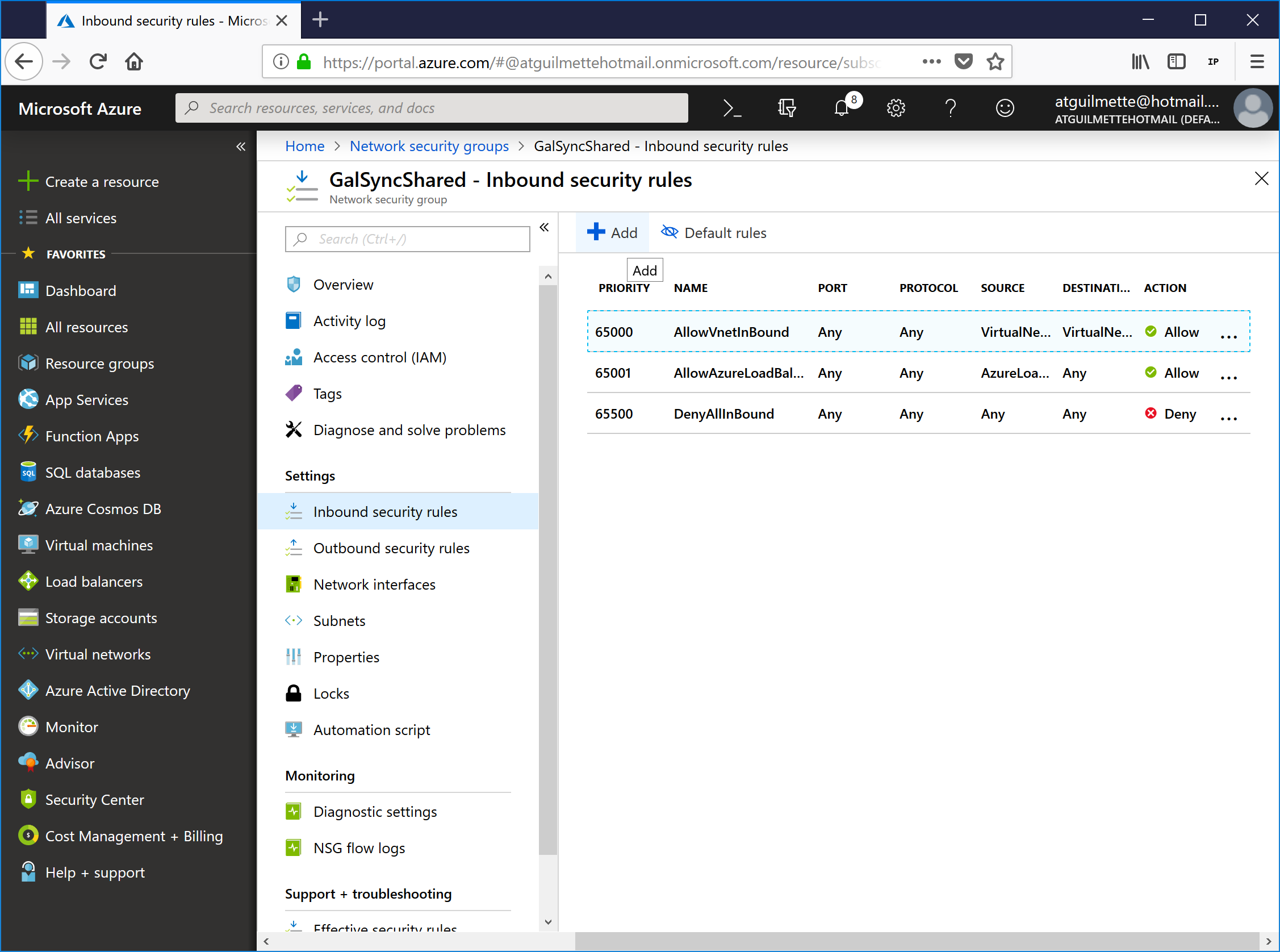

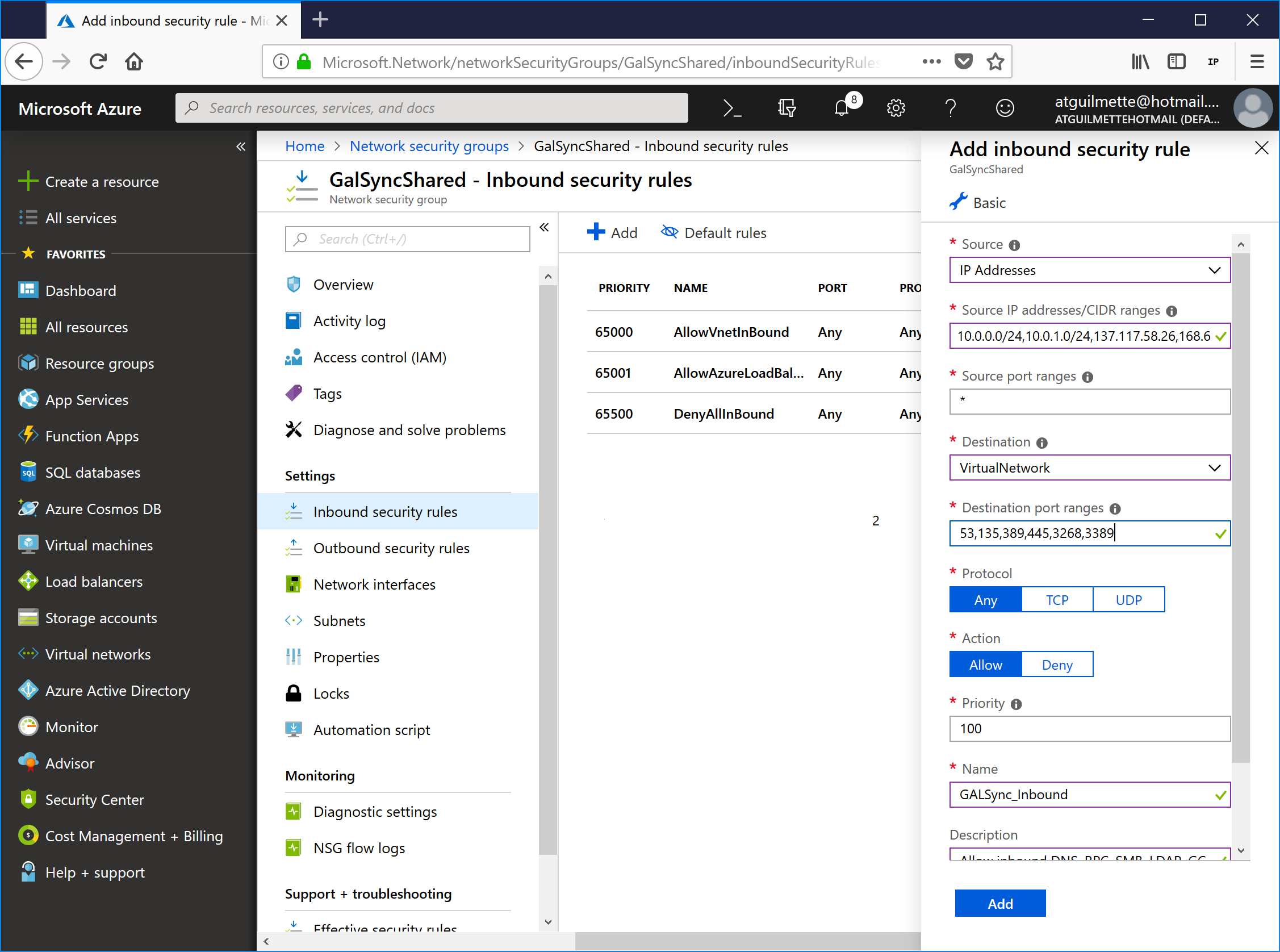

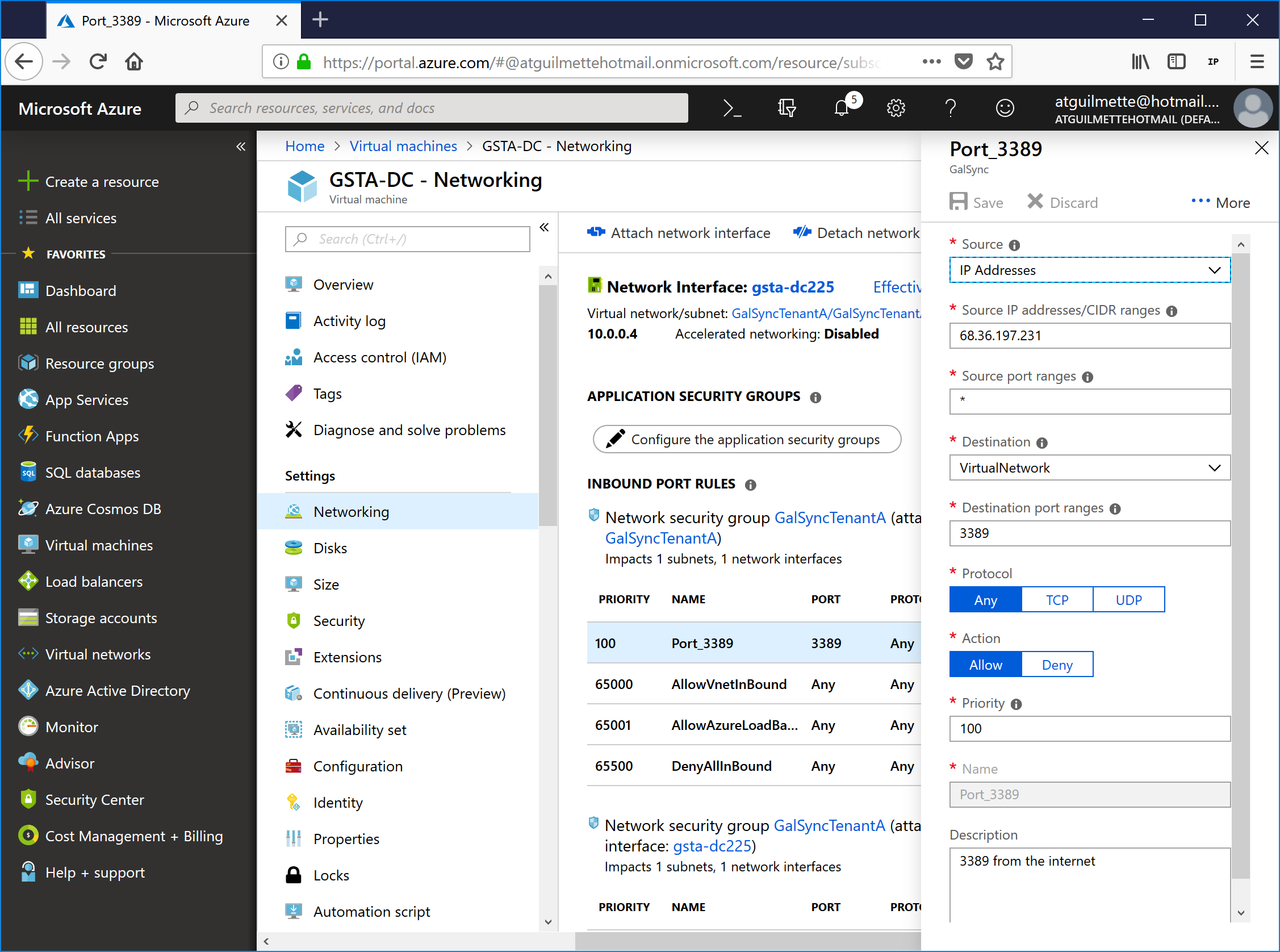

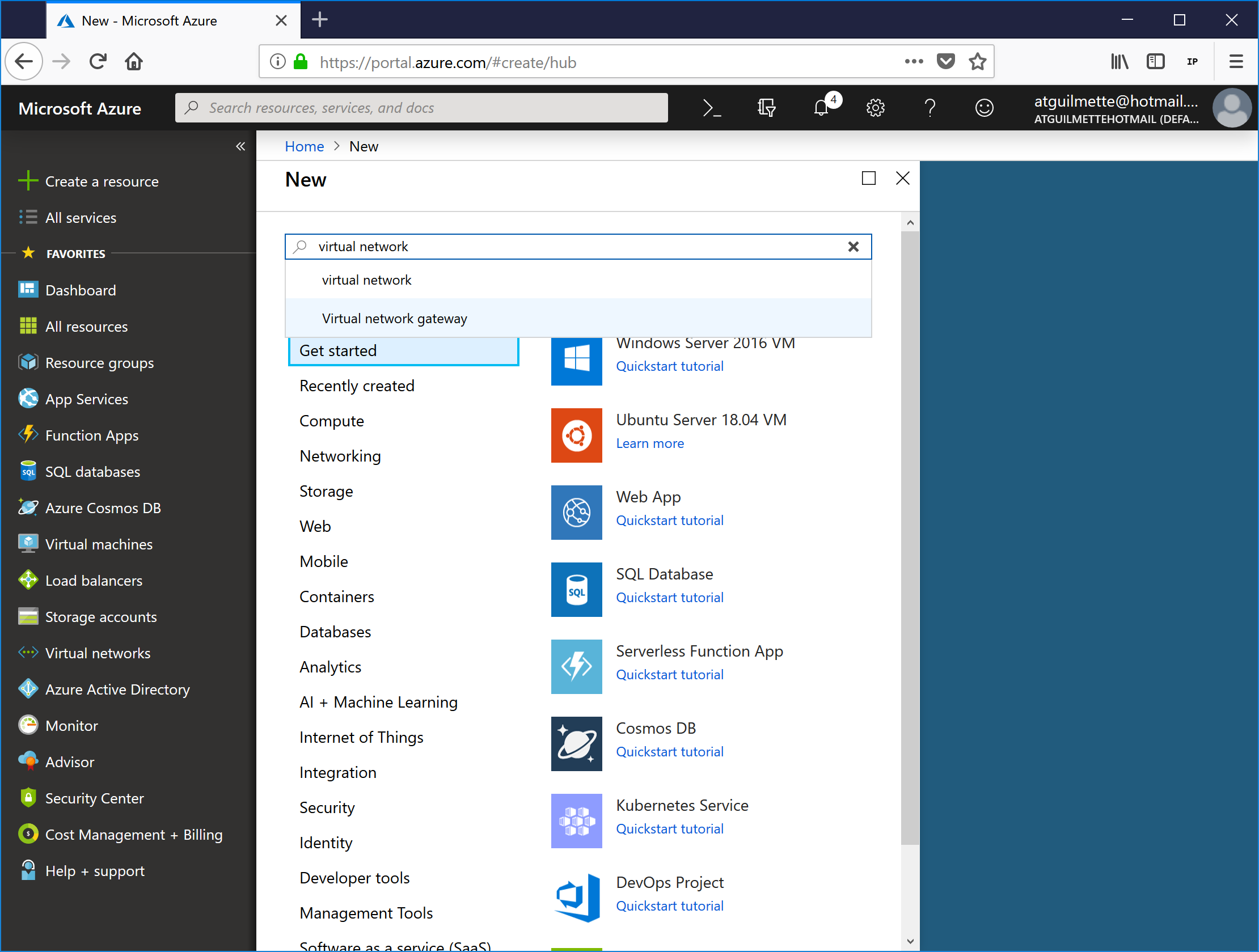

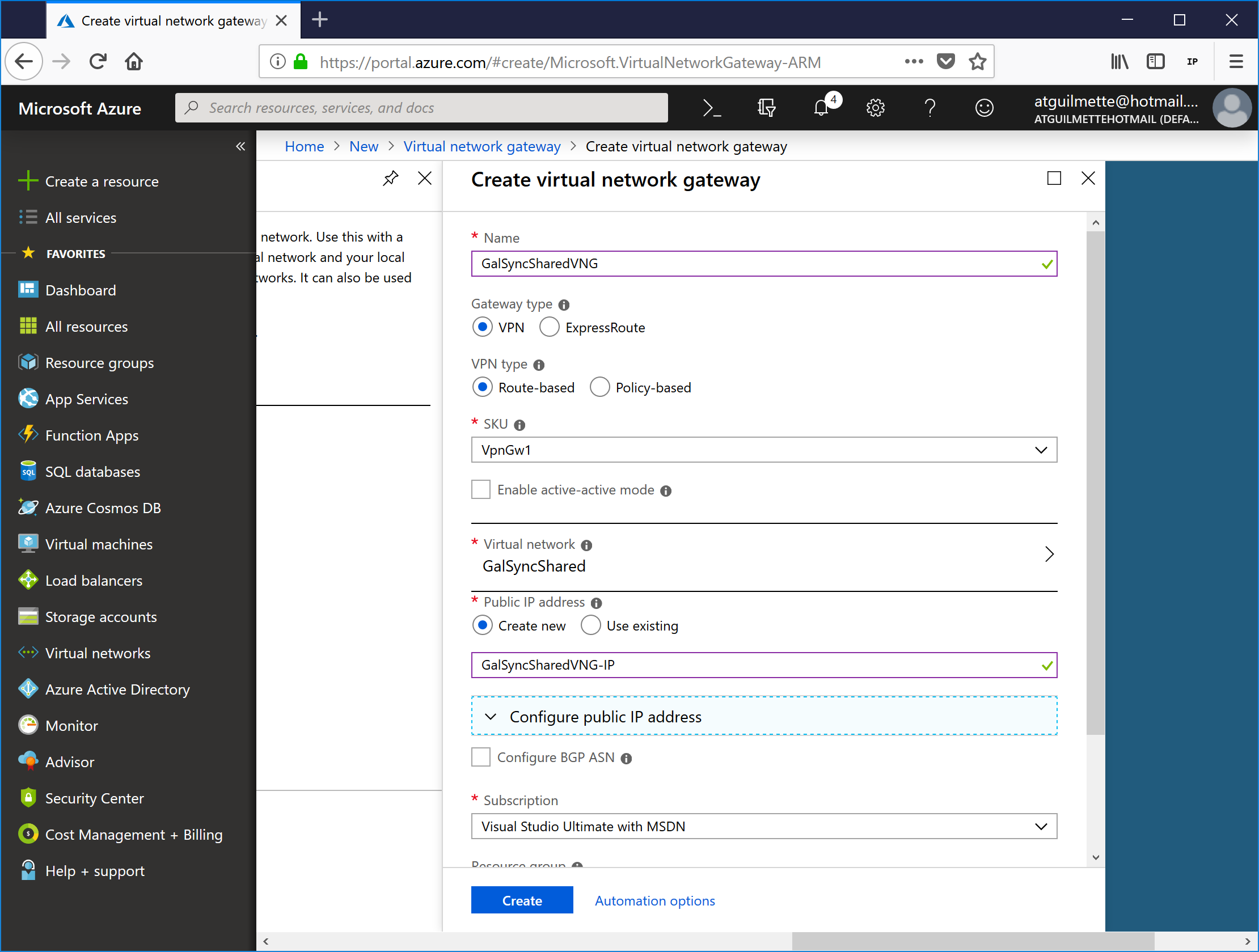

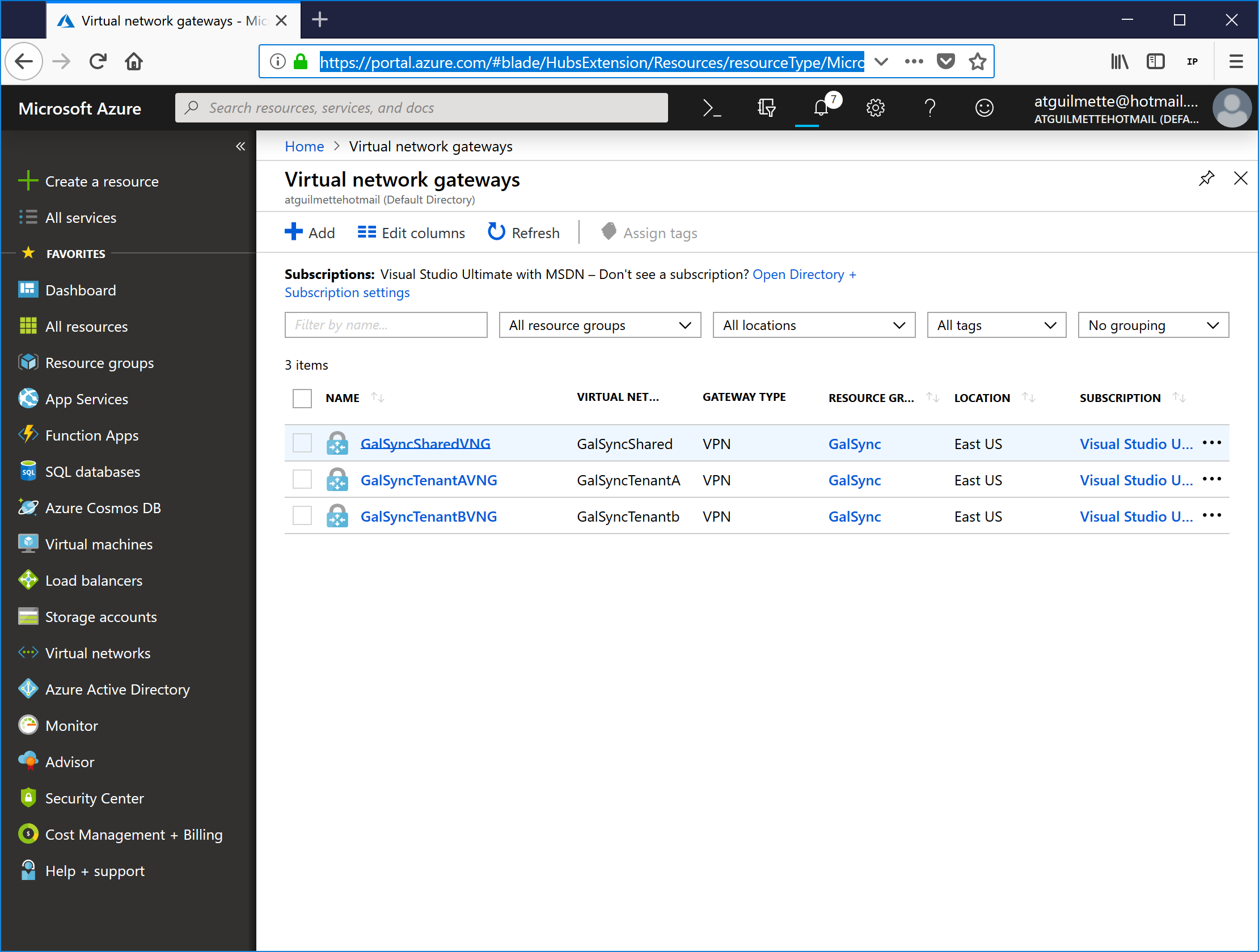

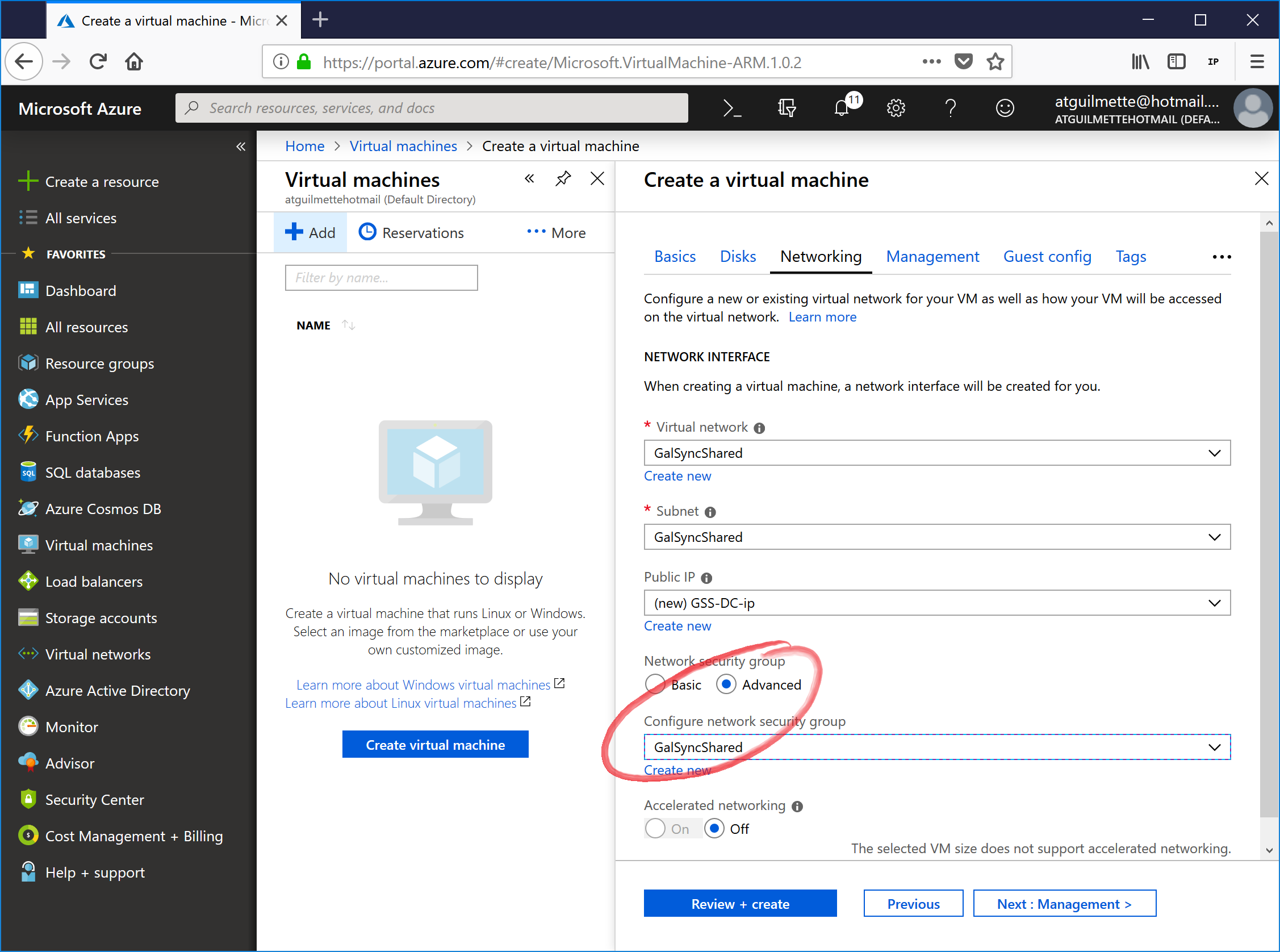

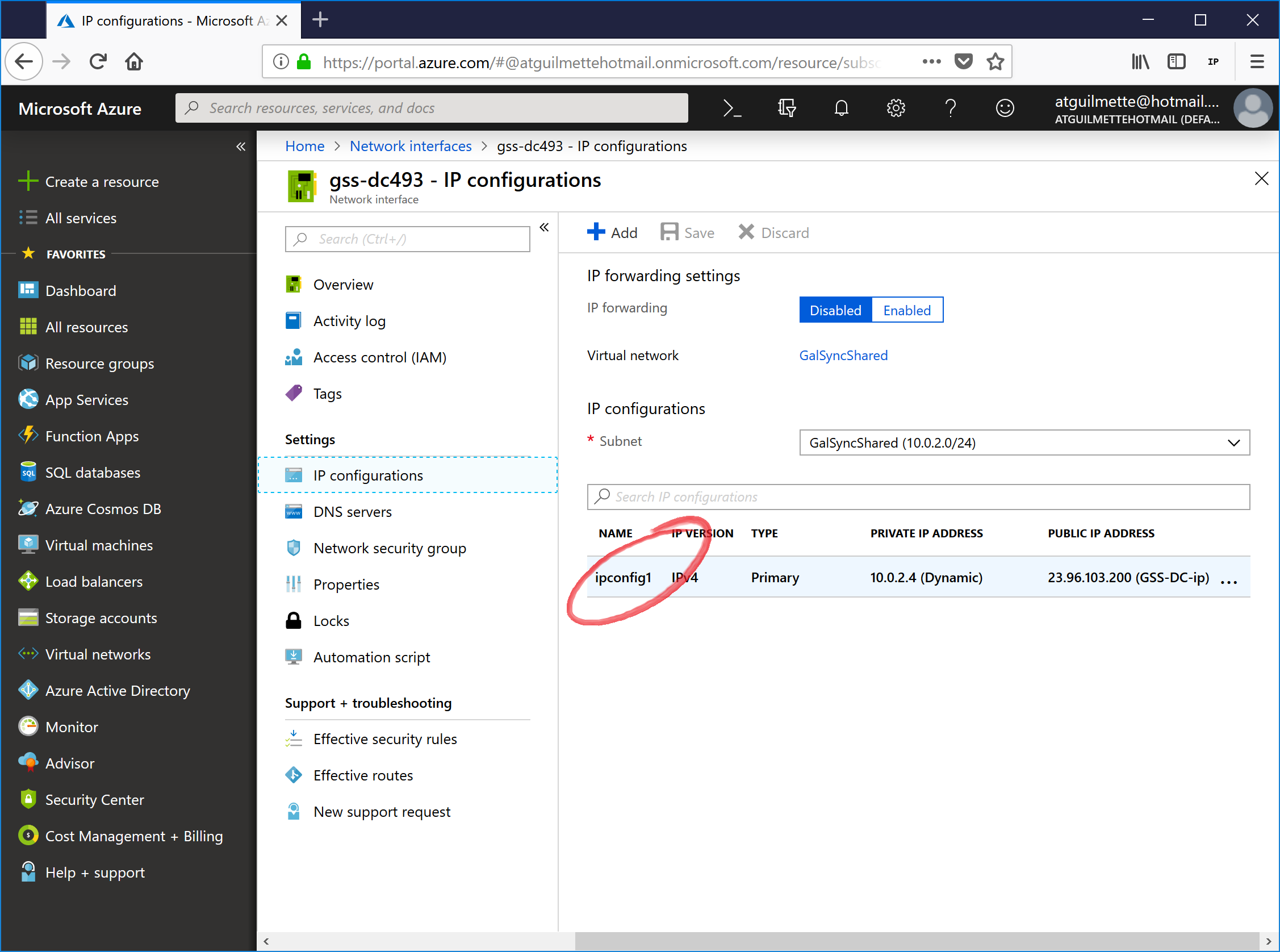

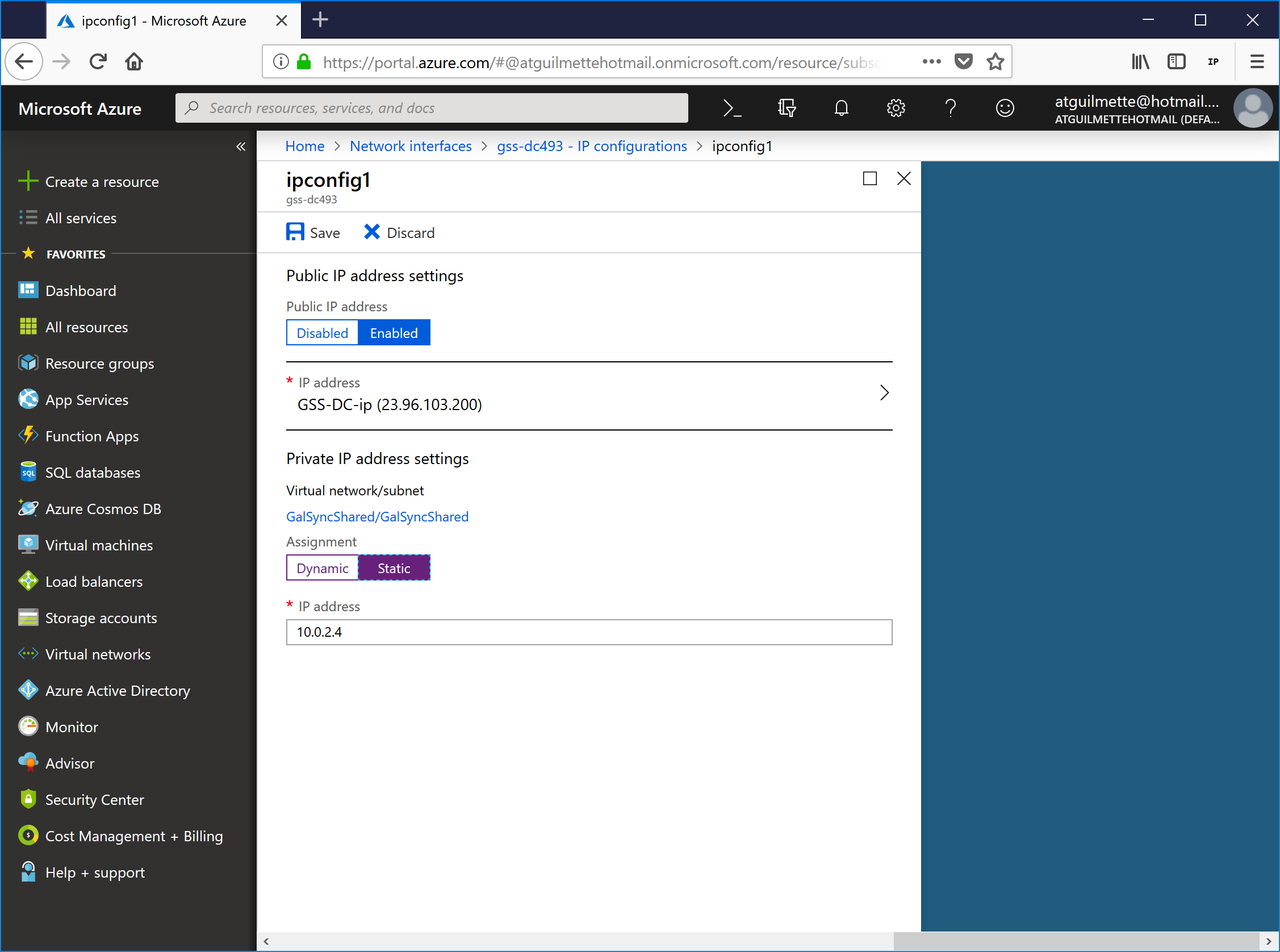

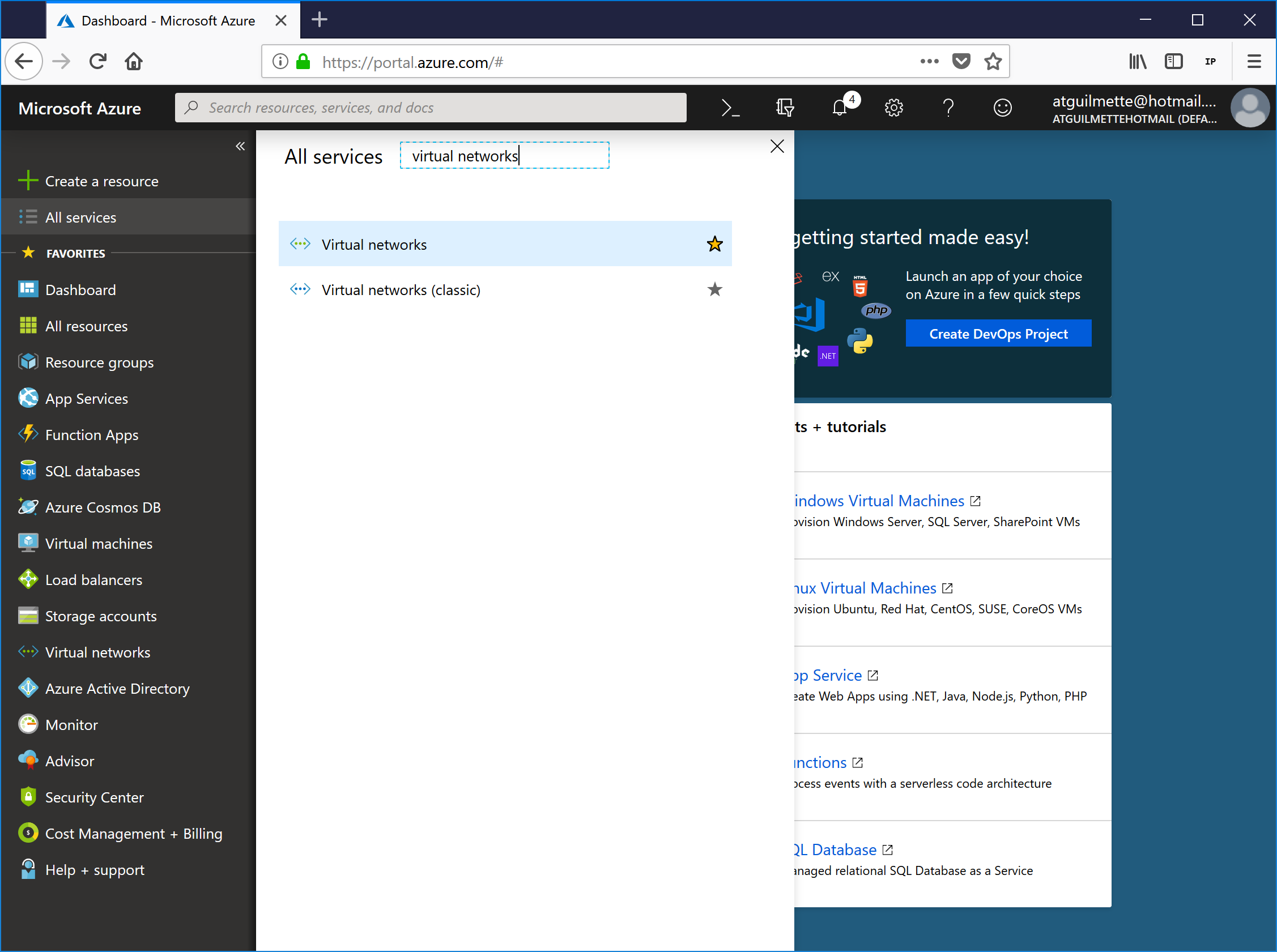
